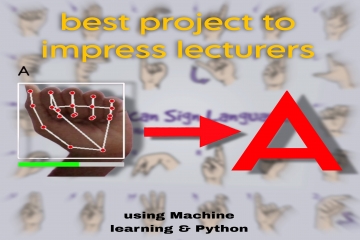

Enhance communication and inclusivity with this Real-Time Sign Language Translator! Designed to bridge the gap between sign language users and others, this project uses machine learning and computer vision to detect hand gestures and translate them into readable text subtitles.

Key Features:

- Real-Time Video Translation: Processes hand gestures using a webcam and displays translated text subtitles.
- Interactive Controls: Start/stop translation, clear subtitles, backspace, add spaces, and read the subtitles aloud using text-to-speech.
- Threshold Adjustment: Customize the delay time for accurate character detection.
- Responsive Design: Clean and modern UI with video feed and control panel for seamless interaction.
- Dynamic Subtitle Display: Subtitles appear at the bottom of the video feed for easy readability.

Tech Stack:

- Programming Language: Python 3.10.0
- Frameworks: Flask for web app integration
- Libraries:
  - MediaPipe for hand landmark detection
  - OpenCV for video processing
  - scikit-learn (version 1.2.0) for machine learning predictions
  - pyttsx3 for text-to-speech conversion

What’s Included:

- Complete source code
- Installation guide with detailed step-by-step instructions
- Pre-trained model for gesture recognition
- Ready-to-use Flask web application

Perfect For:

- Students looking for a unique and impactful project
- Developers interested in accessibility tools
- Educators and researchers exploring machine learning and gesture recognition

Note: This project requires Python 3.10.0 and scikit-learn 1.2.0 for compatibility.
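To give a feel for how the threshold adjustment and the subtitle controls might fit together, here is a minimal sketch in plain Python. It is not the project's actual source: the class name `SubtitleBuffer`, the `hold_seconds` parameter, and the assumption that the threshold is a hold time in seconds are all hypothetical. The idea illustrated is that a predicted character is appended to the subtitle only after the same gesture has been held for the full delay, which filters out flickering per-frame predictions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SubtitleBuffer:
    """Hypothetical helper: commits a character once it has been held long enough."""

    hold_seconds: float = 1.0          # adjustable threshold (assumed to be in seconds)
    text: str = ""                     # subtitle text shown under the video feed
    _candidate: Optional[str] = None   # character currently being held, if any
    _since: float = 0.0                # timestamp when the candidate first appeared
    _committed: bool = False           # True once the candidate has been appended

    def update(self, predicted: Optional[str], now: float) -> str:
        """Feed one per-frame prediction; `now` is a timestamp in seconds."""
        if predicted != self._candidate:
            # Gesture changed (or the hand left the frame): restart the timer.
            self._candidate = predicted
            self._since = now
            self._committed = False
        elif (
            predicted is not None
            and not self._committed
            and now - self._since >= self.hold_seconds
        ):
            # Same gesture held for the full threshold: append it exactly once.
            self.text += predicted
            self._committed = True
        return self.text

    def backspace(self) -> None:
        """Remove the last character, if any."""
        self.text = self.text[:-1]

    def add_space(self) -> None:
        """Append a word separator."""
        self.text += " "

    def clear(self) -> None:
        """Erase the whole subtitle."""
        self.text = ""
```

In a running app, `now` would typically come from `time.monotonic()` and `predicted` from the gesture classifier's output for the current frame (with `None` when no hand is detected). Raising `hold_seconds` trades responsiveness for fewer accidental characters.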